Why Most Published Health & Fitness Research Findings Are False

Most published health and fitness research findings are false, according to John Ioannidis, Ph.D., a professor in the Department of Hygiene and Epidemiology, University of Ioannina School of Medicine, Ioannina, Greece, and Institute for Clinical Research and Health Policy Studies, Department of Medicine, Tufts-New England Medical Center, Tufts University School of Medicine, Boston, Massachusetts, United States of America.

Although many people tell us to "#trustthescience," Ioannidis has mathematically proven that most of what passes for "science" is not trustworthy. Contrary to the dogma taught in the public indoctrination system, the lab coats don't have a direct line to truth and are not the most honest people on Earth.

ARE THE LAB COATS EMPTY OF TRUTH?

By Pi. from Leiden, Holland - Lab 15 - Lab Coats, CC BY 2.0, https://commons.wikimedia.org/w/index.php?curid=4477452

Why Research Findings are False

Ioannidis explains why most published health and fitness research findings are false:

"The probability that a research claim is true may depend on study power and bias, the number of other studies on the same question, and, importantly, the ratio of true to no relationships among the relationships probed in each scientific field. In this framework, a research finding is less likely to be true when the studies conducted in a field are smaller; when effect sizes are smaller; when there is a greater number and lesser preselection of tested relationships; where there is greater flexibility in designs, definitions, outcomes, and analytical modes; when there is greater financial and other interest and prejudice; and when more teams are involved in a scientific field in chase of statistical significance. Simulations show that for most study designs and settings, it is more likely for a research claim to be false than true. Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias."

Let me break that down. Most published health and fitness research findings are false for the following reasons:

- Studies are too small.

- Reported relationships between variables and outcomes are too weak (less than 100% difference in outcome).

- The number of tested relationships is large.

- Researchers have great flexibility in design, definitions, outcomes and methods of data analysis.

- Researchers have financial and other investments in the outcome of the study, biasing the results.

- The fields of health and fitness research include many teams doing research seeking statistically significant relationships between variables and outcomes.

In his paper, Ioannidis shows that most biomedical research is operating in areas with very low pre- and post-study probability for true findings. Indeed, the history of science has shown that past research often wasted resources and effort in fields that yielded absolutely no true information.
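The post-study probability mentioned above can be made concrete. In his paper, Ioannidis gives the positive predictive value (PPV) of a claimed finding, in the absence of bias, as PPV = (1 − β)R / (R − βR + α), where R is the pre-study odds that a probed relationship is true, α is the type I error rate, and 1 − β is the power. The sketch below only illustrates that formula; the function name and the example values of R and power are my own assumptions, not figures taken from the paper.

```python
def ppv(pre_study_odds, alpha=0.05, power=0.8):
    """Post-study probability that a statistically significant finding is true.

    Implements PPV = (1 - beta) * R / (R - beta * R + alpha), with no bias term.
    """
    beta = 1.0 - power
    r = pre_study_odds
    return (1.0 - beta) * r / (r - beta * r + alpha)


# Hypothetical scenarios (illustrative values only, not from the paper).
scenarios = {
    "well-powered study, 1:1 pre-study odds": ppv(1.0, power=0.8),
    "underpowered study, 1:10 pre-study odds": ppv(0.1, power=0.2),
}
for label, value in scenarios.items():
    print(f"{label}: PPV = {value:.2f}")
```

With these assumed inputs the PPV falls from about 0.94 to about 0.29. A PPV below 0.5 is what "more likely false than true" means in this framework.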

The Smaller the Studies, the More Likely the Research Findings are False

Small sample sizes mean less power to detect true relationships. Ioannidis explains:

"Thus, other factors being equal, research findings are more likely true in scientific fields that undertake large studies, such as randomized controlled trials in cardiology (several thousand subjects randomized) than in scientific fields with small studies, such as most research of molecular predictors (sample sizes 100-fold smaller)."

Randomized controlled trials (RCTs) are the best of experimental designs, but strength and health RCTs are necessarily small, typically fewer than 50 people. For example, the 2019 Schoenfeld volume study included only 34 people.

The problem with low-powered studies is that they can produce either false positive or false negative results.
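To get a feel for how sample size drives power, here is a rough sketch using the standard normal approximation for a two-group comparison of means. The group sizes and the effect size of 0.5 are hypothetical values I chose for illustration; they are not taken from any of the studies named here.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison of means.

    Uses the normal approximation power ~ Phi(d * sqrt(n / 2) - z_crit) and
    ignores the negligible chance of significance in the wrong direction.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(effect_size * sqrt(n_per_group / 2) - z_crit)


# Hypothetical group sizes; 0.5 is a "moderate" standardized effect.
for n in (17, 50, 1000):
    print(f"n per group = {n}: power ~ {approx_power(0.5, n):.2f}")
```

Under these assumptions, 17 subjects per group gives roughly a 30% chance of detecting a moderate effect that really exists, while 1000 per group makes detection nearly certain.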

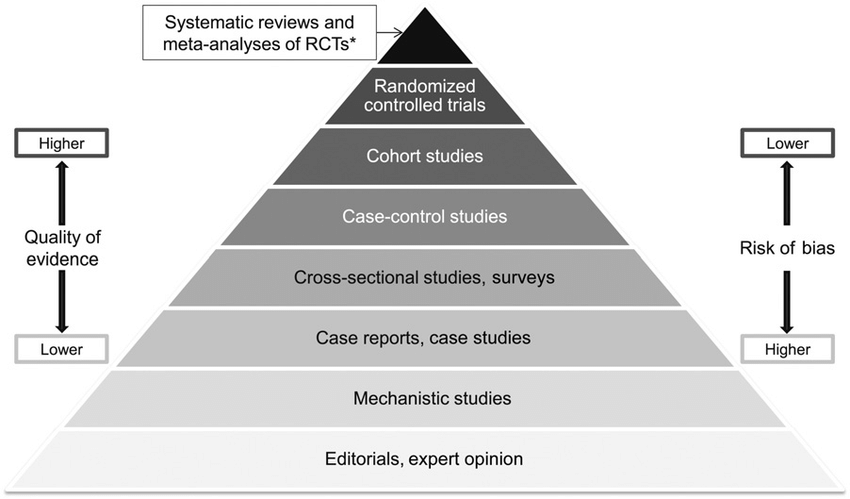

In an attempt to overcome the low power of such small studies, those calling themselves scientists will perform meta-analyses, which they allege to be the highest type of evidence.

However, a meta-analysis is only as good as its underlying data. If all or most of the data comes from studies too small to detect a true relationship, studies that have produced false positive or false negative results due to some other factor (such as investigator bias, which I will discuss below), the meta-analysis simply perpetuates the false data.

In the fields of strength and health, even the meta-analyses are underpowered, with too few subjects to detect true relationships. Ioannidis states that a single study will be more likely to detect a true relationship if it includes several thousand subjects. The 2017 Schoenfeld team meta-analysis that claimed to find a dose-response relationship between weekly training volume and hypertrophy included 34 treatment groups from 15 studies. The total number of subjects was only 418, too small to produce a reliable result, particularly when you notice that the authors have all clearly expressed bias in favor of high-volume training.

RCTs are very costly to conduct. It is highly unlikely that anyone will ever conduct even one randomized controlled trial comparing single set training to multiple set training with several thousand subjects, let alone multiple such studies that would be required to get data that would be suitable for a meta-analysis.

Similarly, it is unlikely that anyone will ever conduct even one RCT with several thousand subjects to investigate the outcomes of a carnivore diet, or a ketogenic diet, or any other type of diet. Even if one were done, you could never be certain that the "average" outcomes of the intervention would apply to you.

Weak Relationships Indicate the Research Findings Are False

When the probability that research in some field will find true relationships is low, it is expected that most findings will vary by chance around the null in the absence of bias. If research finds large effects, where the variable is associated with a relative risk of an outcome greater than 2, then the finding is more likely to be true. This occurs in research on the impact of smoking on cancer or cardiovascular disease, where smoking increases the risk 3- to 20-fold.

In contrast, if some research finds the variable is associated with a relative risk of the outcome less than 2, it is unlikely to represent a true relationship. This is what we find in both diet-disease research and resistance training research. For example, studies implicating meat-eating in colon cancer usually find only small risks, such as meat-eaters having a 20-50% greater relative risk. This small risk indicates that the relationship does not exist. Similarly, in resistance training research the differences in outcomes between multiple sets and single sets are minor, indicating that the relationship is not true.
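For readers unfamiliar with the term, relative risk is simply the rate of an outcome in the exposed group divided by the rate in the unexposed group, so a "30% greater relative risk" is a relative risk of 1.3. The sketch below shows the calculation and compares the result with the threshold of 2 used in this article. The counts are invented purely for illustration and do not come from any study.

```python
def relative_risk(events_exposed, n_exposed, events_unexposed, n_unexposed):
    """Risk in the exposed group divided by risk in the unexposed group."""
    risk_exposed = events_exposed / n_exposed
    risk_unexposed = events_unexposed / n_unexposed
    return risk_exposed / risk_unexposed


# Hypothetical counts, invented purely for illustration.
weak = relative_risk(13, 1000, 10, 1000)     # 30% greater relative risk
strong = relative_risk(100, 1000, 10, 1000)  # tenfold relative risk

for label, rr in (("weak association", weak), ("strong association", strong)):
    print(f"{label}: RR = {rr:.1f}, above 2? {rr > 2}")
```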

Ioannidis writes:

"For example, let us suppose that no nutrients or dietary patterns are actually important determinants for the risk of developing a specific tumor. Let us also suppose that the scientific literature has examined 60 nutrients and claims all of them to be related to the risk of developing this tumor with relative risks in the range of 1.2 to 1.4 for the comparison of the upper to lower intake tertiles. Then the claimed effect sizes are simply measuring nothing else but the net bias that has been involved in the generation of this scientific literature. Claimed effect sizes are in fact the most accurate estimates of the net bias. It even follows that between 'null fields,' the fields that claim stronger effects (often with accompanying claims of medical or public health importance) are simply those that have sustained the worst biases."

Applied to resistance training research, this means that unless the research shows that changing a variable (volume, frequency, etc.) at least doubles the outcome from training, the claimed effect sizes simply estimate the net bias of the researchers.

For example, in the 2017 Schoenfeld volume meta-analysis, the highest alleged effect size was 0.52, for 10+ sets performed weekly. An effect size of 0.5 is considered moderate and an effect size greater than 0.8 is considered large, but effect sizes can be above 1.0. An effect size of 0.5 means that, allegedly, about 70% of the people in the high-set group had greater hypertrophy than the average of the low-set group. But how much?

The percentage gain for fewer than 9 sets weekly was 5.8%, and for more than 9 sets it was 8.2%. This means that those doing fewer than 9 sets weekly produced on average about 71% of the average rate of gains of those doing more than 9 sets weekly. Hence, doing the greater volume increased the rate of gains by about 29 ÷ 71 ≈ 41%.
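The arithmetic above can be checked in a few lines. The two gain percentages are the figures quoted in this article. The last calculation assumes both groups are normally distributed with equal spread, which is my own simplifying assumption for interpreting an effect size of 0.5.

```python
from statistics import NormalDist

low_volume_gain = 5.8   # % gain, fewer than 9 weekly sets (figure quoted above)
high_volume_gain = 8.2  # % gain, more than 9 weekly sets (figure quoted above)

ratio = low_volume_gain / high_volume_gain                  # low as a share of high
relative_increase = high_volume_gain / low_volume_gain - 1  # high relative to low

# Share of a normally distributed high-volume group expected to exceed the
# low-volume group's mean at d = 0.5 (assumes equal spread in both groups).
share_above_mean = NormalDist().cdf(0.5)

print(f"low / high = {ratio:.0%}")
print(f"relative increase = {relative_increase:.0%}")
print(f"share above low-volume mean at d = 0.5: {share_above_mean:.0%}")
```

This prints 71%, 41%, and 69%, in line with the "about 70%" and "about 41%" figures in the text.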

According to Ioannidis, since doing more than 9 sets weekly did not at least double the rate of gains, the data most likely tells us only that those who do resistance training research are biased toward the idea that more volume produces greater results. Similarly, most diet-disease relationship findings are false. For example, eating red meat daily has been associated with increased risks of various diseases, but since the increased risk is less than 100%, the data most likely tells us only that those who do the studies are biased toward the idea that red meat is a risk factor for disease.

Flexibility in Research Design Makes Most Research Findings False

Although meta-analysis is alleged to overcome the limits of small RCTs, it is a controversial statistical procedure. The outcome of a meta-analysis is dependent on the arbitrarily defined criteria and discrimination of the analyst, and most importantly, on the quality of the included studies.

As noted by Carpinelli, meta-analysis is compromised by what statisticians call publication bias or the file drawer effect: Reviewers, editors and publishers are inclined to reject studies that show no difference between interventions and control groups. Since the studies showing null effects are not likely to be published, the published literature is biased toward studies that do show effects.

"One important criterion of causality is the strength of any given association. In any reasonably well conducted study, a weak association may be due to confounding or bias, but it is unlikely that a strong association can be completely explained away by defects in study design. That point is critical to the topic of meta-analysis: when associations are strong (as, say, with smoking and lung cancer), there is no need to resort to it. It is when associations are weak that meta-analysts are tempted to combine studies, in the erroneous belief that the statistical significance thereby accomplished translates to causality."

Thus, people do meta-analyses to tease out "statistical significance" only when the data from RCTs or other research shows weak effects. The very fact that teams have done meta-analyses to attempt to show that multiple-set training is superior to single-set training is in essence a confession that the data is weak; if it weren't weak, there'd be no need for a meta-analysis. Doing the meta-analysis then allows those biased toward a particular conclusion about strength training or diet to design the study so that it will confirm their biases.
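The file drawer effect described earlier in this section can be illustrated with a small simulation. In this sketch the true effect is exactly zero in every study, and a study is "published" only if it reaches statistical significance. Everything here is an assumption chosen for illustration: the number of studies, the group size, the known standard deviation of 1, and the simple z-test.

```python
import random
import statistics
from math import sqrt


def simulate_null_studies(n_studies=2000, n_per_group=20, seed=0):
    """Simulate two-group studies of a null effect; keep only significant ones."""
    rng = random.Random(seed)
    # Standard error of a difference in means when each group has SD = 1.
    se = sqrt(2 / n_per_group)
    all_effects, published = [], []
    for _ in range(n_studies):
        treated = [rng.gauss(0, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        diff = statistics.fmean(treated) - statistics.fmean(control)
        all_effects.append(diff)
        if abs(diff) / se > 1.96:  # two-sided z-test at the 5% level
            published.append(diff)
    return all_effects, published


all_effects, published = simulate_null_studies()
print(f"studies run: {len(all_effects)}, 'published': {len(published)}")
print(f"mean effect, all studies: {statistics.fmean(all_effects):+.3f}")
print(f"smallest published |effect|: {min(abs(d) for d in published):.2f}")
```

Across all simulated studies the average effect sits near zero, as it should. Every "published" study, however, reports an effect larger than about 0.6 standard deviations in one direction or the other, so a literature built only from those studies would show effects where none exist.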

You Aren't Average

Another problem is that most research findings report what happened to the non-existent "average" person in the study. That is, the study was composed of individuals, each having his or her own reaction to the intervention (diet or exercise), but the study reports not what happened to the individuals, only what happened on average.

For example, on average the subjects gained X amount of muscle, or lost X amount of fat, or whatever. The problem here is that an average does not represent the individual. To simplify, suppose you have a resistance training study with just 10 subjects, who are assigned to 10 sets per exercise, and after 10 weeks the individual lean mass gains are as follows:

- Subject 1: 2 kg

- Subject 2: 0 kg

- Subject 3: –1 kg

- Subject 4: 5 kg

- Subject 5: 0 kg

- Subject 6: 1 kg

- Subject 7: –1 kg

- Subject 8: 1 kg

- Subject 9: 2 kg

- Subject 10: –2 kg

The average lean mass gain produced by this intervention is: 0.7 kg. That value makes it appear that this intervention was a success, in that it produced a lean mass gain. This is in spite of the fact that 2 subjects gained no lean mass, 2 subjects lost a kg, and 1 subject lost 2 kg. Thus, the group can show a gain, while some individuals either gain nothing or even show losses, perhaps from overtraining. The whole group outcome can be skewed by the presence of just one individual who gains an extraordinary amount (5 kg in this example), probably being someone who has a genetic propensity to lean mass gains along with tolerance of the intervention inflicted.

In this case only 30% of subjects gained 2 kg or more, and the intervention was useless or worse for 50% of the subjects, yet it would be deemed successful because of the average gain. Average results are not individual results. The 50% of subjects who got no gains or even had losses on this hypothetical intervention needed something quite different to get lean mass gains.
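The tallies for this hypothetical study can be checked directly from the ten values listed above. This is only a sketch of the bookkeeping; the variable names are my own.

```python
from statistics import mean, median

# Lean mass changes (kg) for the ten hypothetical subjects listed above.
gains = [2, 0, -1, 5, 0, 1, -1, 1, 2, -2]

gained = sum(g > 0 for g in gains)
unchanged = sum(g == 0 for g in gains)
lost = sum(g < 0 for g in gains)

# Mean change after dropping the single 5 kg responder.
without_top = [g for g in gains if g != 5]

print(f"mean change:   {mean(gains):.1f} kg")
print(f"median change: {median(gains):.1f} kg")
print(f"gained: {gained}, unchanged: {unchanged}, lost: {lost}")
print(f"mean without the 5 kg responder: {mean(without_top):.2f} kg")
```

The median change (0.5 kg) sits below the mean (0.7 kg), and removing the single 5 kg responder drops the mean to about 0.22 kg, which shows how much one individual can move a small group's average.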

This is why any resistance training program or dietary approach must be customized to the individual. In either case you must monitor your own response to the intervention and make adjustments.

Since Most Research Findings Are False, Don't Trust the Science!

Modern people have been conditioned by public indoctrination centers to seek knowledge from and trust so-called authorities wearing white coats.

The "authorities" tell us to #trustthescience, and they and their minions ridicule anyone who does not trust the process. They call us "science deniers" if we question them or do not believe what they tell us.

However, since Ioannidis has mathematically proven that most published research findings are false, there is no science to trust; and if findings are false they should be denied.

Therefore I conclude that those who control the publication of "the science" want you and me to trust the authorities without question so that we don't think or do anything outside the box they have designed for our habitation. They want to control what we think and what we do, for their own profit. It should be obvious. They wouldn't put so much effort and money into controlling what we think through education and media if it did not give them big profits.

The 2019 virus fiasco put this on full display. The 'experts' like Fauci who claimed to be 'the science' did not tell the public how to reinforce their immunity through improving their diet and exercise. They did not tell them about the immune-enhancing effects of sunshine/vitamin D or the antiviral properties of vitamin C. Instead, they told people:

- stay inside, which would increase vitamin D deficiency, a risk factor for viral infection

- stay away from other people, even though social isolation increases inflammation

- you can't go to the gym to build muscle, even though regular exercise strengthens the immune system

With regard to health, if you just think it through a bit, you will realize that the medical professions and pharmaceutical companies would lose big bucks if they told you how to get and remain healthy and you did it. They have a perverse incentive to keep you unhealthy, because that keeps you in need of medical services and drugs. They don't want you to reverse diabetes; they want you to be on a diabetes medication, along with other meds, for your lifetime. They don't want you to lead a healthy lifestyle that gives you an immune system that prevents viruses from being anything more than a minor bother; they want you on a lifetime of shots and boosters.

With regard to nutrition, the demoniacs controlling the media want you confused. Their favorite method of getting control is divide and conquer. In politics they divide us up and pit us against one another by presenting binary cons like left-right, Democrat-Republican, liberal-conservative and communist-capitalist. You choose one con and become the enemy of those who choose the other; while we are preoccupied fighting with one another, no one notices the demonic goons who are enslaving all of us. Similarly, in nutrition they keep people confused and fighting over fat vs. carbs, vegan vs. carnivore, and so on. So long as we are either confused about what to do or fighting with our neighbors, we aren't paying attention to how the demoniacs are controlling the narratives and taking it all to the bank.

Free Yourself From "Science™"

Since "Science™" has few if any trustworthy answers, it's time to stop expecting science to provide answers.

The powers that be have trained us not to trust ourselves, and to look to the 'experts' to solve all of our problems for us, but those 'experts' have ulterior motives and don't really care what happens to you. Hence, it's time for us all to take responsibility for our own health and fitness.
